This work is partially supported by National Science Council, Taiwan under grant 102-2511-S-008-008.

© Springer-Verlag Sarawak Malaysia 2014

Named Entity Extraction via Automatic Labeling and Tri-Training: Comparison of Selection Methods

Chien-Lung Chou and Chia-Hui Chang National Central University, Taoyuan, Taiwan

formatc.chou@gmail.com, chia@csie.ncu.edu.tw

Abstract. Detecting named entities in documents is one of the most important tasks in knowledge engineering. Previous studies rely on annotated training data, but large annotated data sets are expensive to obtain, which limits the effectiveness of recognition. In this research, we propose a semi-supervised learning approach for named entity recognition (NER) via automatic labeling and tri-training, which makes use of unlabeled data and structured resources containing known named entities. By modifying tri-training for sequence labeling and deriving a proper initialization, we can train an NER model for Web news articles automatically with satisfactory performance. In the task of Chinese personal name extraction from 8,672 news articles on the Web (with 364,685 sentences and 54,449 person names, 11,856 of them distinct), an F-measure of 90.4% can be achieved.

Keywords: named entity extraction, co-labeling method, tri-training

1 Introduction

Named entity extraction is a fundamental task for many knowledge engineering applications. Like much research in this area, the task relies on annotated training examples that require large amounts of manual labeling, which limits the number of training examples. While human-labelled training examples ($L$) have high quality, their cost is very high. Therefore, most NER tasks are limited to several thousand sentences because of the high cost of labeling. For example, the English dataset for the CoNLL 2003 shared task consists of 14,987 sentences for four entity categories: PER, LOC, ORG, and MISC. It is unclear, however, whether sufficient data is provided for training or whether the learning algorithms have reached their capacity. Thus the major concern in this paper is how to prepare training data for entity extraction on the Web.

The idea in this research is to make use of existing structured databases for automatically labeling known entities in documents. For example, personal names, location names, and company names can be obtained from sources such as a Who’s Who website and accessible government data for registered organizations. Thus, we propose a semi-automated method to collect data that include known named entities and label answers automatically. While such training data may contain errors, self-testing can be applied to filter out unreliable labels with low confidence.

On the other hand, the use of unlabeled training examples ($U$) has also proved to be a promising technique for classification. For example, co-training [2] and tri-training [17] are two successful techniques that prepare new labeled training data from unlabeled examples: co-training uses examples labeled with high confidence by the other classifier, while tri-training uses examples with consensus answers from the other two classifiers. By estimating the error rate of each learned classifier, we can calculate the maximum number of new consensus answers that ensures the error rate is reduced.

While tri-training has been shown to be successful in classification, its application to sequence labeling is not well explored. The challenge here is to obtain a common label sequence as a consensus answer from multiple models. Because there are $k^d$ possible label sequences for an unlabeled example with length $d$ and $k$ labels, different mechanisms can be designed to select examples for training. Another key issue with tri-training is the assumed initial error rate (0.5), which leads to a limited number of co-labeling examples for training and early termination when training on large data sets.

This paper extends our previous work on semi-supervised sequence labeling based on tri-training for Chinese person name extraction [4] and compares various selection methods to explore their effectiveness in tri-training. We give the complete algorithm for the estimation of the initial error rate and the choice of a label sequence with the largest probability. On a test set of 8,672 news articles (364,685 sentences) containing 54,449 personal names (11,856 distinct names), the semi-automatic model built on CRF (conditional random field) with 7000 known celebrity names achieves an F-measure of 86.4%, which is improved to 88.9% with self-testing. We show that the proposed tri-training further improves the performance through unlabeled data to 90.4% via random selection, which outperforms the S2A1D (Two Agree One Disagree) approach proposed by Chen et al. [3].

2 Related Work

Entity extraction is the task of recognizing named entities from unstructured text documents. It is one of the information tasks used to test how well a machine can understand messages written in natural language and automate mundane tasks normally performed by humans. The development of machine learning research from classification to sequence labeling, such as the Hidden Markov Model (HMM) and the Conditional Random Field (CRF) [13], has been widely discussed in recent years. While supervised learning shows an impressive improvement over unsupervised learning, it requires large training data to be labeled with answers. Therefore, semi-supervised approaches have been proposed, as discussed next.

Semi-supervised learning refers to techniques that also make use of unlabeled data for training. Many approaches have been previously proposed for semi-supervised learning, including generative models, self-learning, co-training, graph-based methods [16] and information-theoretic regularization [15]. Although a number of semi-supervised classification methods have been proposed, semi-supervised learning for sequence segmentation has received considerably less attention and is designed according to a different philosophy.

Co-training and tri-training have been mainly discussed for classification tasks with relatively few labeled training examples. For example, the original co-training paper by Blum and Mitchell described experiments to classify web pages into two classes using only 12 labeled web pages as examples [2]. This co-training algorithm requires two views of the training data and learns a separate classifier for each view using labeled examples. Nigam and Ghani demonstrated that co-training performed better when the independent feature set assumption is valid [14]. For comparison, they conducted their experiments on the same (WebKB course) data set used by [2].

Goldman and Zhou relaxed the redundant and independent assumption and presented an algorithm that uses two different supervised learning algorithms to learn a separate classifier from the provided labeled data [6]. Empirical results demonstrated that two standard classifiers can be used to successfully label data for each other.

Tri-training is an improvement of co-training that uses three classifiers and a voting mechanism to address the confidence issue of answers co-labeled by two classifiers. Based on three classifiers $h_1$, $h_2$ and $h_3$ ($i, j, k \in \{1, 2, 3\}$, $i \neq j \neq k$), tri-training uses two classifiers $h_j$ and $h_k$ to label each instance in $U$. If the two classifiers give equal answers, we trust the answer to be correct and put the instance-answer pair into a collection $L_i^t$ for the $t$-th iteration. Later, the union of the labeled training examples $L$ and the collection $L_i^t$ obtained from $h_j$ and $h_k$ is used as training data to update classifier $h_i$ in the next iteration.

While tri-training has been used in many classification tasks, its application to sequence labeling tasks is limited. In Chen et al.’s work [3], only the most probable label sequence from each model is considered, and the agreement measure shown in Eq. (1) is used to select examples for $h_i$: the sentences labeled with the highest agreement by $h_j$ and $h_k$ and with the lowest agreement by $h_i$ and $h_j$ (S2A1D).

$$A(y^j, y^k) = \sum_{l=1}^{d} \delta(y_l^j, y_l^k) \tag{1}$$

where $y = \{y_1, \ldots, y_d\}$ is the label result by $h$ with length $|x| = d$, and $\delta$ equals 1 when the two labels match and 0 otherwise. The new training samples were chosen to be the ones labeled by $h_j$,

ignoring the label result by $h_k$. The process iterates until no more unlabeled examples are available. Thus, Chen et al.’s method is not guaranteed to satisfy the PAC learning theory.

3 System Architecture

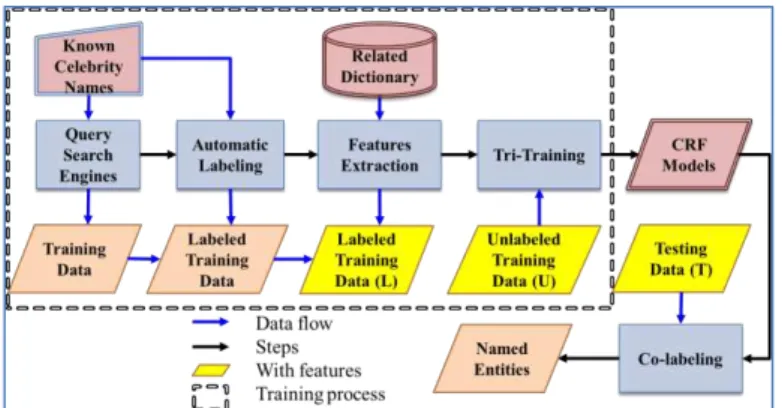

In this paper, we propose a semi-supervised learning model for named entity recognition (NER). The idea of semi-supervised training comes from two aspects: the first is annotating a large amount of training examples via automatic labeling of unlabeled data using existing entity names, and the second is making use of both labeled and unlabeled data during learning via tri-training. The framework is illustrated in Fig. 1.

3.1 Tri-training for Classification

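Let $L$ denote the labeled example set with size $|L|$ and $U$ denote the unlabeled example set with size $|U|$. In each round $t$, tri-training uses two models, $h_j$ and $h_k$, to label each instance $x$ from the unlabeled training data $U$. If $h_j$ and $h_k$ give the same answer, then we could use $x$ and the common answer as a new training example, i.e. $L_i^t = \{(x, y) : x \in U, y = h_j^t(x) = h_k^t(x)\}$, for model $h_i$ ($i, j, k \in \{1, 2, 3\}$, $i \neq j \neq k$). To ensure that the error rate is reduced through iterations, Eq. (2) must be satisfied when re-training $h_i$,

$$e_i^t |L_i^t| < e_i^{t-1} |L_i^{t-1}| \tag{2}$$

where $e_i^t$ denotes the error rate of model $h_i$ in $L_i^t$, which is estimated by $h_j$ and $h_k$ in the $t$-th round using the labeled data $L$: the number of labeled examples on which $h_j$ and $h_k$ agree on an incorrect label is divided by the number of labeled examples on which $h_j$ and $h_k$ make the same label, as shown in Eq. (3).¹

$$e_i^t = \frac{|\{(x, y) \in L : h_j^t(x) = h_k^t(x) \neq y\}|}{|\{(x, y) \in L : h_j^t(x) = h_k^t(x)\}|} \tag{3}$$

If $|L_i^t|$ is too large, such that Eq. (2) is violated, it would be necessary to randomly select at most $u$ examples from $L_i^t$ such that Eq. (2) can be satisfied.

$$u = \left\lceil \frac{e_i^{t-1} |L_i^{t-1}|}{e_i^t} - 1 \right\rceil \tag{4}$$

$$L_i^t \leftarrow \begin{cases} \mathrm{Subsample}(L_i^t, u) & \text{if Eq. (2) is violated} \\ L_i^t & \text{otherwise} \end{cases} \tag{5}$$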

1 Assuming that the unlabeled examples hold the same distribution as that held by the labeled ones.

Fig. 1. Semi-supervised NER based on automatic labeling and tri-training

For the last step in each round, the union of the labeled training examples $L$ and $L_i^t$, i.e. $L \cup L_i^t$, is used as training data to re-train classifier $h_i$.
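The bookkeeping of Section 3.1 can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: classifiers are assumed to be plain callables, the function names `estimate_error`, `max_new_examples` and `select_new_examples` are hypothetical, and the formulas follow the standard tri-training conditions of Zhou and Li [17] that Eqs. (2) to (5) refer to.

```python
import math
import random


def estimate_error(labeled, h_j, h_k):
    """Eq. (3)-style estimate: among labeled examples on which h_j and h_k
    agree, the fraction whose shared label is wrong."""
    agree = [(x, y) for x, y in labeled if h_j(x) == h_k(x)]
    if not agree:
        return 1.0  # assumption: without any agreement, treat the pair as unreliable
    wrong = sum(1 for x, y in agree if h_j(x) != y)
    return wrong / len(agree)


def max_new_examples(prev_error, prev_size, error):
    """Eq. (4)-style cap: u = ceil(e_prev * |L_prev| / e - 1)."""
    return math.ceil(prev_error * prev_size / error - 1)


def select_new_examples(candidates, prev_error, prev_size, error, rng=None):
    """Keep all co-labeled candidates if Eq. (2) holds, else subsample (Eq. (5))."""
    candidates = list(candidates)
    if error * len(candidates) < prev_error * prev_size:
        return candidates  # Eq. (2) is already satisfied
    if error == 0:
        return []  # only reachable when the previous product is 0 as well
    u = max(max_new_examples(prev_error, prev_size, error), 0)
    rng = rng or random.Random()
    return rng.sample(candidates, min(u, len(candidates)))
```

For instance, with a previous error rate of 0.5 on 10 examples and a current error rate of 0.25, at most 19 of 100 co-labeled candidates would be kept, while a pool of only 10 candidates would be kept whole.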

3.2 Modification for Co-Labeling

The tri-training algorithm was originally designed for traditional classification and uses a voting strategy to address the confidence issue. At initialization, the recognition performance of the classifiers is low because there are few training examples. As a result, the training data added from unlabeled data might contain many wrongly labeled examples, since the voting strategy is less accurate than a specific statistical method.

For sequence labeling, we need to define what the common labels for an instance should be when two models (training time) or three models (testing time) are involved.

In this study, we propose a new method for example selection. Assume that each model can output the $m$ best label sequences with the highest probability ($m = 5$). Let $P_i(y|x)$ denote the probability that an instance $x$ has label $y$ as estimated by $h_i$. We select the common label with the largest probability sum, $P_j(y|x) + P_k(y|x)$, by the co-labeling method. In other words, we use $h_j$ and $h_k$ to estimate possible labels, then choose the common label with the maximum probability sum to re-train $h_i$. Thus, the added set of examples, $L_i^t$, prepared for $h_i$ in the $t$-th round is defined as follows:

$$L_i^t = \{(x, y) : x \in U,\ y = \arg\max_{y'} \left(P_j(y'|x) + P_k(y'|x)\right),\ P_j(y|x) + P_k(y|x) \geq 2\theta\} \tag{6}$$

where $\theta$ (default 0.5) is a threshold that controls the quality of the training examples provided to $h_i$.
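A minimal sketch of this co-labeling step is given below. It assumes that each model's $m$-best output is available as a dictionary from label sequence to probability, and that the threshold is applied to the two-model probability sum as `2 * theta`; both the data layout and the function name `co_label` are assumptions made for illustration, not details confirmed by the text.

```python
def co_label(nbest_j, nbest_k, theta=0.5):
    """Return the label sequence shared by both m-best lists that has the
    largest probability sum, or None if no shared sequence is confident enough.

    nbest_j, nbest_k: dicts mapping a label sequence (tuple of tags) to the
    probability assigned by models h_j and h_k respectively.
    """
    common = set(nbest_j) & set(nbest_k)
    if not common:
        return None  # the two models share no candidate sequence
    best = max(common, key=lambda seq: nbest_j[seq] + nbest_k[seq])
    score = nbest_j[best] + nbest_k[best]
    # Assumption: the threshold theta is scaled by the number of models (2).
    return best if score >= 2 * theta else None
```

A sentence whose best shared sequence scores 0.6 and 0.5 under the two models would be accepted at the default threshold (1.1 against 1.0) and rejected at `theta=0.6` (1.1 against 1.2).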

During testing, the common label for an instance $x$ is determined by three models $h_1$, $h_2$ and $h_3$. We choose the final output with the largest probability sum from three models when that sum reaches a confidence of $3\theta$. If the largest probability sum from three models is not greater than $3\theta$, then we choose the label with the largest probability sum from two models, provided that sum reaches a confidence of $2\theta$. The last selection criterion is the label with the maximum probability estimated by any single one of the three models, as shown in Eq. (7).

$$\hat{y} = \begin{cases} \arg\max_y \left(P_1(y|x) + P_2(y|x) + P_3(y|x)\right) & \text{if } \max_y \left(P_1(y|x) + P_2(y|x) + P_3(y|x)\right) \geq 3\theta \\ \arg\max_y \left(P_i(y|x) + P_j(y|x)\right),\ i, j \in \{1, 2, 3\},\ i \neq j & \text{if } \max_y \left(P_i(y|x) + P_j(y|x)\right) \geq 2\theta \\ \arg\max_y \max\left(P_1(y|x), P_2(y|x), P_3(y|x)\right) & \text{otherwise} \end{cases} \tag{7}$$
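The three-stage decision at testing time can be sketched as follows. The sketch assumes the same dictionary layout for each model's $m$-best list, treats a sequence missing from a list as having probability 0, and scales the threshold by the number of models involved; these choices and the name `combine_three` are illustrative assumptions.

```python
from itertools import combinations


def combine_three(nbests, theta=0.5):
    """Pick a final label sequence from three models' m-best lists.

    nbests: list of three non-empty dicts, each mapping a label sequence
    (tuple of tags) to the probability that model assigned to it.
    """
    candidates = set().union(*nbests)

    def prob_sum(seq, models):
        # Assumption: a sequence absent from a model's list contributes 0.
        return sum(nbests[m].get(seq, 0.0) for m in models)

    # Stage 1: largest three-model probability sum, accepted at 3 * theta.
    score3, best3 = max((prob_sum(s, (0, 1, 2)), s) for s in candidates)
    if score3 >= 3 * theta:
        return best3

    # Stage 2: largest two-model probability sum, accepted at 2 * theta.
    score2, best2 = max(
        (prob_sum(s, pair), s)
        for s in candidates
        for pair in combinations(range(3), 2)
    )
    if score2 >= 2 * theta:
        return best2

    # Stage 3: fall back to the single most probable sequence of any model.
    _, best1 = max((p, s) for nb in nbests for s, p in nb.items())
    return best1
```

The fallback order mirrors the prose: full consensus first, then pairwise agreement, then the most confident single model.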

3.3 Modification for the Initialization

According to Eq. (2), the product of the error rate and the number of new training examples defines an upper bound for the next iteration. Meanwhile, $|L_i^{t-1}|$ should satisfy Eq. (8) such that $|L_i^t|$ after subsampling, i.e., $u$, is still bigger than $|L_i^{t-1}|$.

$$|L_i^{t-1}| > \frac{e_i^t}{e_i^{t-1} - e_i^t} \tag{8}$$

In order to estimate the size of $|L_i^1|$, i.e., the number of new training examples for the first round, we need to estimate $e_i^0$, $e_i^1$, and $|L_i^0|$ first. Zhou et al. [17] assumed a 0.5 error rate for $e_i^0$, computed $e_i^1$ by $h_j$ and $h_k$, and estimated the lower bound for $|L_i^0|$ by Eq. (9), thus:

$$|L_i^0| = \left\lfloor \frac{e_i^1}{e_i^0 - e_i^1} + 1 \right\rfloor = \left\lfloor \frac{e_i^1}{0.5 - e_i^1} + 1 \right\rfloor \tag{9}$$

The problem with this initialization is that, for a larger dataset $|L|$, such an initialization will have no effect on retraining and will lead to an early stop for tri-training. For example, consider the case when the error rate $e_i^1$ is less than 0.4: the value of $|L_i^0|$ will be no more than 5, leading to a small upper bound for $e_i^1 |L_i^1|$ according to Eq. (2). That is to say, we can only sample a small subset of $L_i^1$ for training $h_i$ based on Eq. (5). On the other hand, if $e_i^1$ is close to 0.5 such that the value of $|L_i^0|$ is greater than the original dataset $|L|$, it may completely alter the behavior of $h_i$.
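The numeric example above can be checked with a short sketch. It uses exact fractions to avoid floating-point rounding at the floor and ceiling, and it assumes the standard tri-training forms of Zhou and Li [17] for the initial size and for the subsampling cap; the function names are hypothetical.

```python
import math
from fractions import Fraction


def initial_size_lower_bound(error, assumed_prev_error=Fraction(1, 2)):
    """Eq. (9)-style value: floor(e1 / (e0 - e1) + 1) with e0 assumed to be 0.5."""
    if error >= assumed_prev_error:
        raise ValueError("error must be below the assumed initial error rate")
    return math.floor(error / (assumed_prev_error - error) + 1)


def first_round_cap(error, assumed_prev_error=Fraction(1, 2)):
    """Eq. (4)-style cap on the first-round sample under the original initialization."""
    size0 = initial_size_lower_bound(error, assumed_prev_error)
    return math.ceil(assumed_prev_error * size0 / error - 1)
```

With an error rate of 0.4 the initial size is 5 and the first-round cap is 6 examples, however many unlabeled sentences were co-labeled, which illustrates why the original initialization stops early on large data sets.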

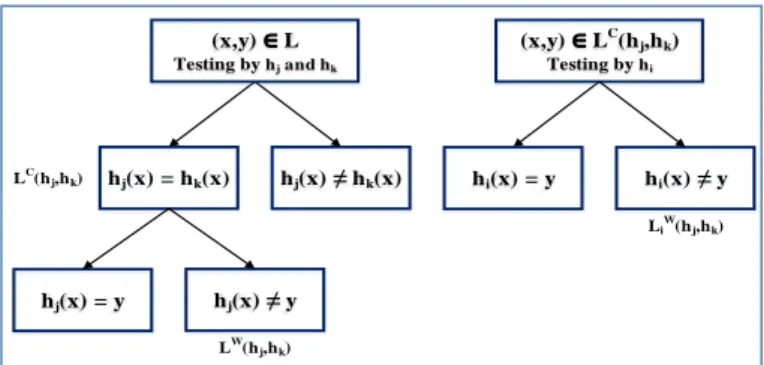

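To avoid this difficulty, we propose a new estimation for the product $e_i^0 |L_i^0|$. Let $L^C(h_j, h_k)$ denote the set of labeled examples (from $L$) on which the classification made by $h_j$ is the same as that made by $h_k$ in the initial round, and $L^W(h_j, h_k)$ denote the set of examples from $L^C(h_j, h_k)$ on which both $h_j$ and $h_k$ make an incorrect classification, as shown in Eq. (10) and (11). In addition, we define $L_i^W$ to be the set of examples from $L^C(h_j, h_k)$ on which $h_i$ makes an incorrect classification in the initial round, as shown in Eq. (12). The relationship among $L^C(h_j, h_k)$, $L^W(h_j, h_k)$, and $L_i^W$ is illustrated in Fig. 2.

$$L^C(h_j, h_k) = \{(x, y) \in L : h_j(x) = h_k(x)\} \tag{10}$$

$$L^W(h_j, h_k) = \{(x, y) \in L^C(h_j, h_k) : h_j(x) \neq y\} \tag{11}$$

$$L_i^W = \{(x, y) \in L^C(h_j, h_k) : h_i(x) \neq y\} \tag{12}$$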

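Fig. 2. The relationship among Eq. (10), (11), and (12).

[Diagram: each $(x, y) \in L$ is tested by $h_j$ and $h_k$; examples with $h_j(x) = h_k(x)$ form $L^C(h_j, h_k)$, and those among them with $h_j(x) \neq y$ form $L^W(h_j, h_k)$. Each $(x, y) \in L^C(h_j, h_k)$ is then tested by $h_i$, and those with $h_i(x) \neq y$ form $L_i^W(h_j, h_k)$.]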

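By replacing $e_i^0 |L_i^0|$ with $|L_i^W|$ and estimating $e_i^1$ by $|L^W(h_j, h_k)| / |L^C(h_j, h_k)|$, we can estimate an upper bound for $|L_i^1|$ via Eq. (4). That is to say, we can compute an upper bound for $e_i^0 |L_i^0|$ and replace Eq. (4) by Eq. (13) to estimate the upper bound of $|L_i^1|$ in the first round.

$$|L_i^1| = \left\lceil \frac{e_i^0 |L_i^0|}{e_i^1} - 1 \right\rceil = \left\lceil \frac{|L_i^W| \cdot |L^C(h_j, h_k)|}{|L^W(h_j, h_k)|} - 1 \right\rceil \tag{13}$$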
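As a rough illustration of the modified initialization, the sketch below counts the three sets on the labeled data and plugs them into an Eq. (13)-style bound. It assumes classifiers are plain callables, that the set on which $h_i$ errs is taken from the examples where $h_j$ and $h_k$ agree (as Fig. 2 suggests), and that the "minus one" term carries over from Eq. (4); the name `first_round_upper_bound` is hypothetical.

```python
import math


def first_round_upper_bound(labeled, h_i, h_j, h_k):
    """Upper bound on the number of first-round examples for h_i.

    labeled: iterable of (x, y) pairs from the labeled set L.
    Returns None when h_j and h_k never agree on a wrong label, because the
    estimated error rate is then 0 and the bound is undefined.
    """
    agree = [(x, y) for x, y in labeled if h_j(x) == h_k(x)]      # L^C(h_j, h_k)
    both_wrong = [(x, y) for x, y in agree if h_j(x) != y]        # L^W(h_j, h_k)
    i_wrong = [(x, y) for x, y in agree if h_i(x) != y]           # L_i^W
    if not both_wrong:
        return None
    # |L_i^W| replaces e0 * |L0|; |L^W| / |L^C| estimates e1.
    return math.ceil(len(i_wrong) * len(agree) / len(both_wrong) - 1)
```

Because the bound grows with the number of agreeing examples, it scales with the labeled set instead of being pinned to a handful of sentences by the fixed 0.5 assumption.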

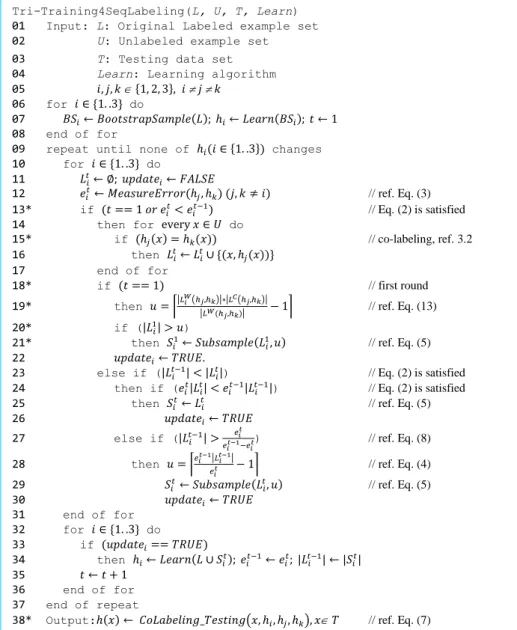

The modified algorithm for tri-training is shown in Fig. 3, where lines 13 and 18-21 are modified to support the new estimation of $|L_i^1|$, while lines 15 and 38 are modified for sequence co-labeling as discussed above.

4 Experiments

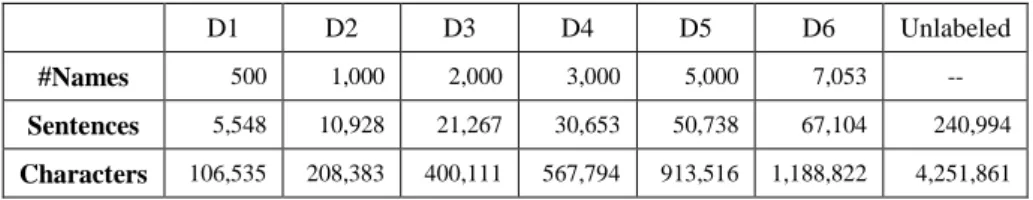

We apply our proposed approach to Chinese personal name extraction on the Web. We use known celebrity names to query the search engines of four news websites in Taiwan (Liberty Times, Apple Daily, China Times, and United Daily News) and collect the top 10 search results for sentences that contain the query keyword. We then use all known celebrity names to match extraction targets via automatic labeling. Given different numbers of personal names, we prepare six datasets by automatic labeling and consider them as labeled training examples ($L$) (see Table 1).
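The automatic labeling step can be pictured with a small dictionary-matching sketch. It assumes a greedy longest-match scan over the sentence, which the text does not specify, and the names in the example are invented for illustration.

```python
def label_known_names(sentence, known_names):
    """Return (start, end) character spans of known names found in a sentence.

    Assumption: names are matched greedily from left to right, preferring the
    longest known name at each position; matched spans do not overlap.
    """
    names = sorted((n for n in known_names if n), key=len, reverse=True)
    spans = []
    i = 0
    while i < len(sentence):
        for name in names:
            if sentence.startswith(name, i):
                spans.append((i, i + len(name)))
                i += len(name)
                break
        else:
            i += 1  # no known name starts here; move to the next character
    return spans
```

For example, `label_known_names("王小明昨天拜訪陳大文", {"王小明", "陳大文"})` yields the spans `(0, 3)` and `(7, 10)`, which can then be turned into character-level tags for training.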

We also fetched articles from these four news websites from 2013/01/01 to 2013/03/31 to obtain 20,974 articles for unlabeled and testing data. There is an average of 35 sentences in a document. However, to increase the possibility of containing person names, we only select sentences that include a common surname followed by some common first name to obtain 240,994 sentences as unlabeled data (Table 1). Finally, we manually labeled 8,672 news articles, yielding a total of 293,585 sentences with 54,449 person names (11,856 distinct person names) as the testing data.

To compare with state-of-the-art NER tools, we applied Stanford Chinese NER [5] on this testing dataset to get a baseline. We used Chinese Knowledge and Information Processing (CKIP) [11] and Stanford Word Segmenter to complete the segmentation task first, since Stanford Chinese NER is designed to be run on word-segmented Chinese. The precision, recall, and F-measure of Stanford Chinese NER are 0.762, 0.445, and 0.562 when using CKIP, but the performance drops to 0.640, 0.373, and 0.471 when using Stanford Segmenter, as shown in Table 2.

Table 1. Labeled dataset ($L$) and unlabeled dataset ($U$) for Chinese person name extraction

| | D1 | D2 | D3 | D4 | D5 | D6 | Unlabeled |
|---|---|---|---|---|---|---|---|
| #Names | 500 | 1,000 | 2,000 | 3,000 | 5,000 | 7,053 | -- |
| Sentences | 5,548 | 10,928 | 21,267 | 30,653 | 50,738 | 67,104 | 240,994 |
| Characters | 106,535 | 208,383 | 400,111 | 567,794 | 913,516 | 1,188,822 | 4,251,861 |

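Tri-Training4SeqLabeling($L$, $U$, $T$, Learn)

    01  Input: $L$: Original Labeled example set
    02         $U$: Unlabeled example set
    03         $T$: Testing data set
    04         Learn: Learning algorithm
    05  $i, j, k \in \{1, 2, 3\}$, $i \neq j \neq k$
    06  for $i \in \{1, 2, 3\}$ do
    07    $S_i \leftarrow$ BootstrapSample($L$); $h_i \leftarrow$ Learn($S_i$); $e_i^0 \leftarrow 0.5$
    08  end of for
    09  repeat until none of $h_i$ ($i \in \{1, 2, 3\}$) changes
    10    for $i \in \{1, 2, 3\}$ do
    11      $L_i^t \leftarrow \emptyset$; $update_i \leftarrow$ FALSE
    12      $e_i^t \leftarrow$ MeasureError($h_j$, $h_k$)  // ref. Eq. (3)
    13*     if ($e_i^t < e_i^{t-1}$)  // Eq. (2) is satisfied
    14      then for every $x \in U$ do
    15*            if ($\max_y (P_j(y|x) + P_k(y|x)) \geq 2\theta$)  // co-labeling, ref. 3.2
    16             then $L_i^t \leftarrow L_i^t \cup \{(x, y)\}$
    17           end of for
    18*          if ($t = 1$)  // first round
    19*          then $u \leftarrow \lceil |L_i^W| \cdot |L^C(h_j, h_k)| / |L^W(h_j, h_k)| - 1 \rceil$  // ref. Eq. (13)
    20*               if ($|L_i^t| > u$)
    21*               then $L_i^t \leftarrow$ Subsample($L_i^t$, $u$)  // ref. Eq. (5)
    22                $update_i \leftarrow$ TRUE
    23           else if ($|L_i^{t-1}| < |L_i^t|$)  // Eq. (2) is satisfied
    24           then if ($e_i^t |L_i^t| < e_i^{t-1} |L_i^{t-1}|$)  // Eq. (2) is satisfied
    25                then keep $L_i^t$ unchanged  // ref. Eq. (5)
    26                     $update_i \leftarrow$ TRUE
    27                else if ($|L_i^{t-1}| > e_i^t / (e_i^{t-1} - e_i^t)$)  // ref. Eq. (8)
    28                then $u \leftarrow \lceil e_i^{t-1} |L_i^{t-1}| / e_i^t - 1 \rceil$  // ref. Eq. (4)
    29                     $L_i^t \leftarrow$ Subsample($L_i^t$, $u$)  // ref. Eq. (5)
    30                     $update_i \leftarrow$ TRUE
    31    end of for
    32    for $i \in \{1, 2, 3\}$ do
    33      if $update_i =$ TRUE
    34      then $e_i^{t-1} |L_i^{t-1}| \leftarrow e_i^t |L_i^t|$
    35           $h_i \leftarrow$ Learn($L \cup L_i^t$)
    36    end of for
    37  end of repeat
    38* Output: $h(x)$  // ref. Eq. (7)

Fig. 3. Pseudo code of the proposed tri-training for sequence labeling. Line numbers marked with * differ from the original tri-training.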

Table 2. Baseline performance via Stanford Chinese NER using two segmenters

| Stanford Chinese NER | Precision | Recall | F-measure |
|---|---|---|---|
| With CKIP | 0.762 | 0.445 | 0.562 |
| With Stanford Segmenter | 0.640 | 0.373 | 0.471 |

Fourteen features were used in the experiment, including common surnames, first names, job titles, numeric tokens, alphabet tokens, punctuation symbols, and common characters in front of or behind personal names. The predefined dictionaries contain 486 job titles, 224 surnames, 38,261 first names, and 107 symbols, as well as 223 common words in front of and behind person names. For the tagging scheme, we used BIESO tagging to mark the named entities to be extracted. We use CRF++ [9] for the following experiments. With a template involving unigram macros and the three tokens before and after the current token, a total of 195 features are produced. We define precision, recall and F-measure based on the number of personal names.
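The tagging scheme and the evaluation measures can be illustrated with a short sketch. It assumes the usual reading of BIESO (Begin, Inside, End, Single, Outside) applied per character, and it scores extracted names as exact-match items; the helper names are hypothetical, and the paper's exact counting convention may differ.

```python
def bieso_tags(length, spans):
    """Turn (start, end) name spans into one BIESO tag per character."""
    tags = ['O'] * length
    for start, end in spans:
        if end - start == 1:
            tags[start] = 'S'  # single-character name
        else:
            tags[start] = 'B'
            for pos in range(start + 1, end - 1):
                tags[pos] = 'I'
            tags[end - 1] = 'E'
    return tags


def f_measure(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def precision_recall_f(gold, predicted):
    """Score predicted names against gold names, both given as sets of items."""
    gold, predicted = set(gold), set(predicted)
    correct = len(gold & predicted)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall, f_measure(precision, recall)
```

As a consistency check, `f_measure(0.762, 0.445)` rounds to 0.562 and `f_measure(0.640, 0.373)` rounds to 0.471, matching the baseline rows of Table 2.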

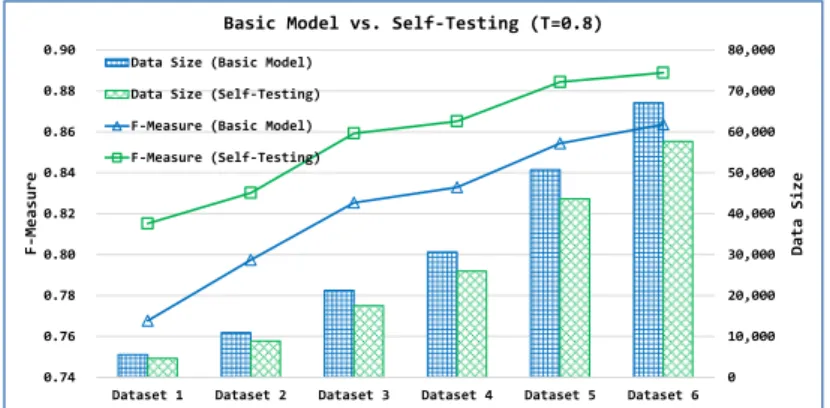

4.1 Performance of Basic Model with Automatic Labeling & Self-Testing

In this section, we report the performance of the basic model, which uses query keywords to collect news articles and labels the collected articles with celebrity names and six reporter name patterns. While this procedure does not ensure perfect training data, it provides acceptable labelled training data for semi-supervised learning. The performance is illustrated in Fig. 4, with an F-measure of 0.768 for D1 and 0.864 for D6, showing that large training data can greatly improve the performance.

Based on this basic model, we apply self-testing to filter examples with low confidence and retrain a new model with the set of high confidence examples. The performance is improved for all datasets with confidence levels from 0.5 to 0.9. An improved F-measure of 2.53% ~ 4.76% can be obtained, depending on the number of celebrity names we have (0.815 for D1 and 0.889 for D6).

4.2 Improvement of Modified Initialization for Tri-Training

Second, we show the effect of the modified initialization for tri-training (Fig. 5). With the new initialization by Eq. (13), the number of examples that can be sampled from the unlabeled dataset $U$ is greatly increased. For dataset 1, the unlabeled data selected is five times the original data size (an increase from 4,637 to 25,234), leading to an improvement of 2.4% in F-measure (from 0.815 to 0.839). For dataset 2, the final data size is twice the original data size (from 8,881 to 26,173) with an F-measure improvement of 2.7% (from 0.830 to 0.857). For dataset 6, since the unlabeled data is too large to be loaded for training together with the labeled data, we only use 75% for the experiment. The improvement in F-measure is 1.5% (from 0.889 to 0.904). Overall, an improvement of 1.2% ~ 2.7% can be obtained with this proposed tri-training algorithm for sequence labeling.

Fig. 4. Basic Model and Self-testing in testing set

[Chart "Basic Model vs. Self-Testing (T=0.8)": data size and F-measure of the basic model and of self-testing for Dataset 1 to Dataset 6.]

4.3 Comparison of Selection Methods

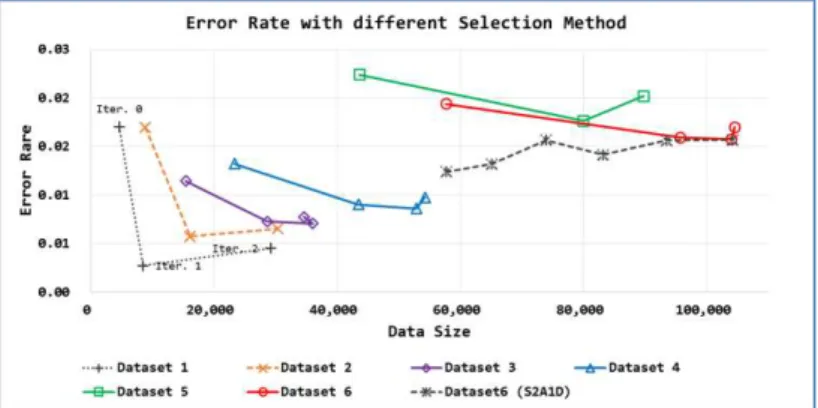

Finally, we compare Chen et al.’s selection method with our proposed co-labeling method using the error rate in the training set and the F-measure in the testing set. As shown in Fig. 6, the proposed method based on the most probable sequence stops (after 2 or 3 iterations) when the error rate increases for all datasets, while Chen et al.’s selection method (S2A1D) continues execution as long as there are unlabeled training examples. Thus, the error rate fluctuates (i.e. it may increase or decrease) around 0.02. In other words, the modified tri-training by Chen et al. does not follow the PAC learning theory, and there is no guarantee that the performance will increase or decrease in the testing set.

Fig. 5. Performance of Tri-Training with different initialization

[Chart "Tri-Training with different initialization of |L1|": data size and F-measure of self-testing, the original tri-training, and the new tri-training for Dataset 1 to Dataset 6.]

Fig. 6. Error Rate in the training set

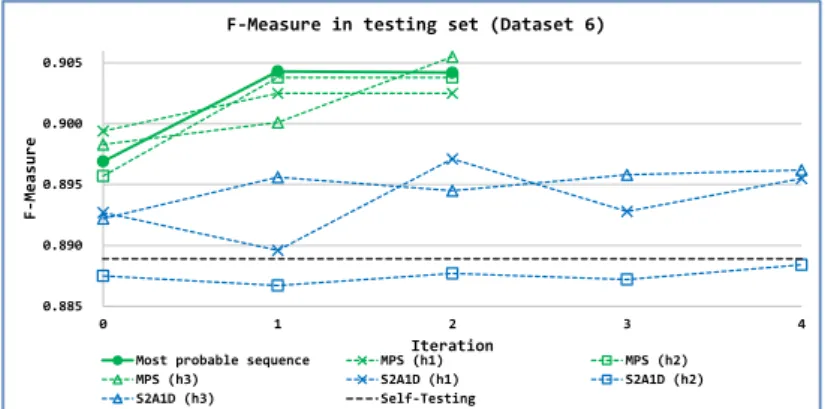

As shown in Fig. 7, the F-measure of the most probable sequence in the testing set presents an upward trend, and the three classifiers may outperform each other during different iterations. As for S2A1D, one of the classifiers ($h_2$) continues to be dominated by the other two classifiers and performs worse than the baseline (self-testing). It seems the final output does not take advantage of the three classifiers and has a similar result to the baseline (self-testing). In contrast, the proposed method can combine the opinions of the three classifiers and output a credible label via tri-training.

Fig. 7. F-measure in the testing set across iterations

[Chart "F-Measure in testing set (Dataset 6)": F-Measure versus Iteration (0 to 4) for Most probable sequence, MPS (h1), MPS (h2), MPS (h3), S2A1D (h1), S2A1D (h2), S2A1D (h3), and Self-Testing.]

5 Conclusion

In this paper, we extend the semi-supervised learning model proposed in [4] for named entity recognition. We introduced the tri-training algorithm for sequence labeling and compared Chen et al.’s selection method based on S2A1D with the proposed method based on the most probable sequence. The result shows that the proposed method retains the spirit of PAC learning and provides a more reliable method for co-labeling via the most probable sequence. This most probable sequence based co-labeling method with random selection outperforms Chen et al.'s method on dataset 6 (0.904 vs. 0.890). Meanwhile, we proposed a new way to estimate the number of examples selected from unlabeled data for initialization. As shown in the experiments, the modified initialization improves the F-measure by 1.3%~2.3% over the original initialization.

References

1. Ando, R.K., Zhang, T.: A High-performance Semi-supervised Learning Method for Text Chunking. In: Proceedings of the 43rd Annual Meeting on Association for Computational Linguistics (ACL '05), pp. 1-9 (2005)

2. Blum, A., Mitchell, T.: Combining Labeled and Unlabeled Data with Co-training. In: Proceedings of the Eleventh Annual Conference on Computational Learning Theory, pp. 92-100 (1998)

3. Chen, W., Zhang, Y., Isahara, H.: Chinese Chunking with Tri-training Learning. In: Proceedings of the 21st International Conference on Computer Processing of Oriental Languages: Beyond the Orient: The Research Challenges Ahead, pp. 466-473. Springer-Verlag (2006)

4. Chou, C.-L., Chang, C.-H., Wu, S.-Y.: Semi-supervised Sequence Labeling for Named Entity Extraction based on Tri-Training: Case Study on Chinese Person Name Extraction. In: Semantic Web and Information Extraction Workshop (SWAIE), in conjunction with COLING 2014, Aug. 24th, Dublin, Ireland (2014)

5. Finkel, J.R., Grenager, T., Manning, C.: Incorporating Non-local Information into Information Extraction Systems by Gibbs Sampling. In: Proceedings of the 43rd Annual Meeting on Association for Computational Linguistics, pp. 363-370. Association for Computational Linguistics (2005)

6. Goldman, S.A., Zhou, Y.: Enhancing Supervised Learning with Unlabeled Data. In: Proceedings of the Seventeenth International Conference on Machine Learning (ICML '00), pp. 327-334 (2000)

7. Grandvalet, Y., Bengio, Y.: Semi-supervised Learning by Entropy Minimization. In: CAP, pp. 281-296. PUG (2004)

8. Jiao, F., Wang, S., Lee, C.-H., Greiner, R., Schuurmans, D.: Semi-supervised Conditional Random Fields for Improved Sequence Segmentation and Labeling. In: Proceedings of the 21st International Conference on Computational Linguistics and the 44th Annual Meeting of the Association for Computational Linguistics (ACL-44), pp. 209-216 (2006)

9. Kudo, T.: CRF++: Yet Another CRF toolkit, http://crfpp.googlecode.com

10. Li, W., McCallum, A.: Semi-supervised Sequence Modeling with Syntactic Topic Models. In: Proceedings of the 20th National Conference on Artificial Intelligence - Volume 2 (AAAI '05), pp. 813-818 (2005)

11. Ma, W.-Y., Chen, K.-J.: Introduction to CKIP Chinese Word Segmentation System for the First International Chinese Word Segmentation Bakeoff. In: Proceedings of the Second SIGHAN Workshop on Chinese Language Processing - Volume 17, pp. 168-171. Association for Computational Linguistics (2003)

12. Mann, G.S., McCallum, A.: Generalized Expectation Criteria for Semi-Supervised Learning with Weakly Labeled Data. J. Mach. Learn. Res. 11, 955-984 (2010)

13. McCallum, A., Li, W.: Early Results for Named Entity Recognition with Conditional Random Fields, Feature Induction and Web-enhanced Lexicons. In: Proceedings of the Seventh Conference on Natural Language Learning HLT-NAACL 2003 - Volume 4 (CONLL'03), pp. 188-191 (2003)

14. Nigam, K., Ghani, R.: Analyzing the Effectiveness and Applicability of Co-training. In: Proceedings of the Ninth International Conference on Information and Knowledge Management (CIKM '00), pp. 86-93 (2000)

15. Zheng, L., Wang, S., Liu, Y., Lee, C.-H.: Information Theoretic Regularization for Semi- supervised Boosting. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '09), pp. 1017-1026 (2009)

16. Zhou, D., Huang, J., Schölkopf, B.: Learning from Labeled and Unlabeled Data on a Directed Graph. In: Proceedings of the 22nd International Conference on Machine Learning (ICML '05), pp. 1036-1043. ACM (2005)

17. Zhou, Z.-H., Li, M.: Tri-Training: Exploiting Unlabeled Data Using Three Classifiers. IEEE Trans. on Knowl. and Data Eng. 17, 1529-1541 (2005)