Mixture of Gaussians

Sargur Srihari

srihari@cedar.buffalo.edu

Mixtures of Gaussians

• Also Called as Finite Mixture Models (FMMs)

∑

=

Σ

=

K

k

k k

k

N x

p

1

)

,

|

(

)

x

( π µ

Goal: Determine the three sets of parameters using maximum likelihood

Gaussian Mixture Model

• A linear superposition of Gaussian components

• Provides a richer class of density models than

the single Gaussian

• GMM are formulated in terms of discrete latent

variables

– Provides deeper insight – Motivates EM algorithm

• Which gives a maximum likelihood solution to no. of components and their means/covariances

GMM Formulation

• Linear superposition of K Gaussians:

• Use 1-of-K representation for latent variable z

– Let z = z1,..,zK whose elements are

– There are K possible states of z corresponding to K components

• Define joint distribution p(x,z)=p(x|z)p(z)

∑

=

Σ

=

K

k

k k

k

N x

p

1

)

,

|

(

)

x

( π µ

marginal conditional

zk ∈{0,1} and zk = 1

k

∑

p(z)

• We need to associate a probability with each

component k

• Denote where parameters

satisfy

• Because z uses 1-of-K it follows that

– since components are mutually exclusive and hence are independent

1

(z)

kK

z k k

p π

=

= ∏

p(z

k= 1) = π

k0 ≤ π

k ≤ 1 and πk = 1 k

∑

With one component p(z1) = π1z1

With two components p(z1, z2) = π1z1π2z2

Conditional distribution p(x|z)

• For a particular component (value of z)

• Thus p(x|z) can be written in the form

• Thus marginal distribution of x is obtained by summing over all possible states of z

• This is the standard form of a Gaussian mixture

p(x | z) = N x |( µk,Σk)zk

k=1 K

∏

p(x) = p(z) p(x | z) = πkzk

k=1 K

∏

N x |( µk,Σk)zkz

∑

= πkN x |( µk,Σk)k=1 K

∑

z

∑

p(x | zk = 1) = N (x | µk, Σk )

Due to the exponent zk all product terms except for one equal one

7

Latent Variable

• If we have observations x1,..,xN

• Because marginal distribution is in the form

– It follows that for every observed data point xn there is a corresponding latent vector zn , i.e., its sub-class

• Thus we have found a formulation of Gaussian mixture involving an explicit latent variable

– We are now able to work with joint distribution p(x,z) instead of marginal p(x)

• Leads to significant simplification through introduction of expectation maximization

p(x) = p(x,z)

z

∑

Another conditional probability (Responsibility)

• In EM p(z |x) plays a role

• The probability p(zk=1|x) is denoted

• From Bayes theorem

• View as prior probability of component k as the posterior probability

it is also the responsibility that component k takes for explaining the observation x

γ(zk) ≡ p(zk = 1 | x) = p(zk = 1) p(x | zk = 1) p(zj = 1) p(x | z j = 1)

j=1 K

∑

= πkN(x |µk,Σk) πjN(x |µk,Σj)

j=1 K

∑

γ (zk )

γ (zk ) = p(zk = 1| x)

p(zk = 1) = πk

Plan of Discussion

• Next we look at

1. How to get data from a mixture model synthetically and then

2. How to estimate the parameters from the data

Synthesizing data from mixture

• Use ancestral sampling

– Start with lowest numbered node and draw a sample,

• Generate sample of z, called z^

– move to successor node and draw a sample given the parent value, etc.

• Then generate a value for x from conditional p(x|z^)

• Samples from p(x,z) are plotted

according to value of x and colored with value of z

• Samples from marginal p(x)

obtained by ignoring values of z

500 points from three Gaussians

Complete Data set

Incomplete Data set

11

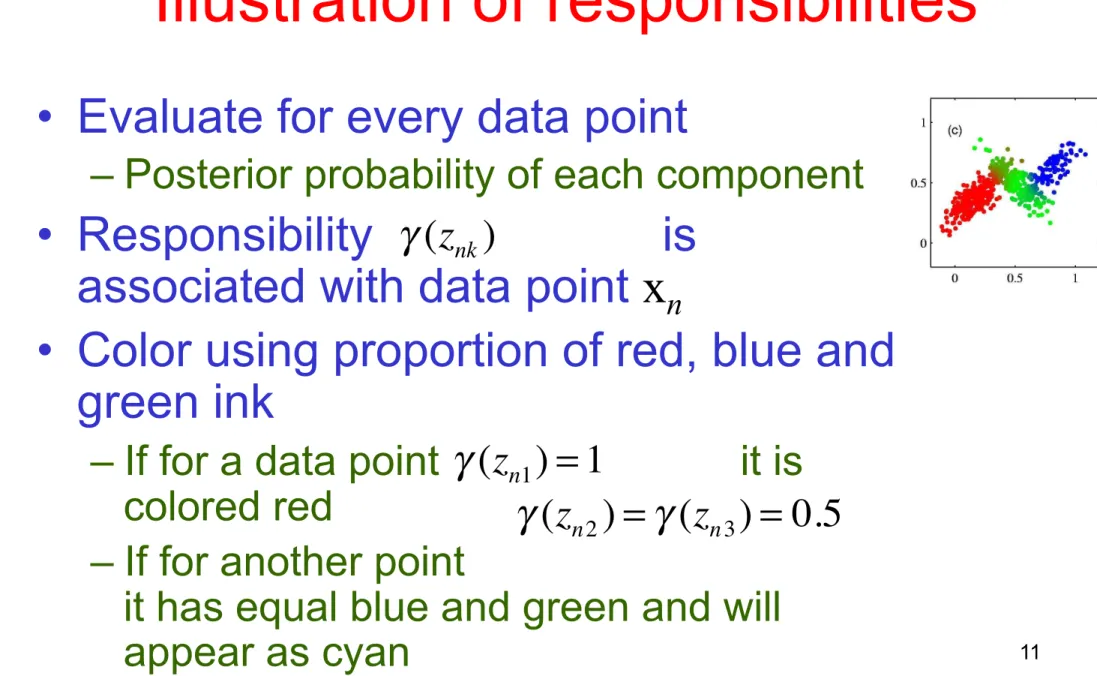

Illustration of responsibilities

• Evaluate for every data point

– Posterior probability of each component

• Responsibility is

associated with data point x

n• Color using proportion of red, blue and

green ink

– If for a data point it is colored red

– If for another point it has equal blue and green and will

appear as cyan

γ (znk )

γ (zn1) = 1

γ (zn2) = γ (zn3) = 0.5

Maximum Likelihood for GMM

• We wish to model data set {x1,..xN} using a mixture of Gaussians (N items each of dimension D)

• Represent by N x D matrix X

– Nth row is given by xnT

• Represent N latent variables with N x K matrix Z with rows znT

• Bayesian Network for p(x,z) is

We are given N i.i.d. samples {xn} with unknown latent points {zn} with parameters πn.

The samples have parameters: mean µ and covariance Σ

Goal is to estimate the three sets of parameters

X = x1 x2 xn

"

#

$ $

$

$

%

& ' ' ' '

Z = z1

z2

zn

"

#

$

$

$

$

%

& ' ' ' '

Likelihood Function for GMM

13

p(x) = p(z) p(x | z) = πkN(x |µk,Σk)

k=1 K

∑

z

∑

Therefore Likelihood function is

p(X |π,µ,Σ) =

k=1

∑

K πkN(xn |µk,Σk)⎧ ⎨

⎩

⎫ ⎬

n=1 ⎭

∏

NTherefore log-likelihood function is

ln p(X |π,µ,Σ) = ln

k=1

∑

K πkN(xn |µk,Σk)⎧ ⎨

⎩

⎫ ⎬

n=1 ⎭

∑

NWhich we wish to maximize

A more difficult problem than for a single Gaussian

Mixture density function is

Summation is for

Marginalizing the joint

Product is over the N i.i.d. samples

Maximization of Log-Likelihood

• Goal is to estimate the three sets of

parameters

• Before proceeding with the m.l.e. briefly

mention two technical issues:

– Problem of singularities

– Identifiability of mixtures 14

ln p(X | π , µ , Σ) = ln

k=1

∑

Kπ

kN (x

n| µ

k, Σ

k)

⎧ ⎨

⎩

⎫ ⎬

⎭

n=1

∑

Nπ , µ , Σ

15

• Consider Gaussian mixture

– components with covariance matrices

• Data point that falls on a mean will contribute to the likelihood function

• As term goes to infinity

• Therefore maximization of log-likelihood is not well-posed

– Interestingly, this does not happen in the case of a single Gaussian

• Multiplicative factors go to zero

– Does not happen in the Bayesian approach

• Problem is avoided using heuristics

– Resetting mean or covariance

2

1/2

1 1

(x | x , )

n n j (2 )

j

N σ I

π σ

=

One component assigns finite values and other to large value

Multiplicative values Take it to zero

σ j → 0

Σk = σk 2I

µj = xn

Problem of Identifiability

A K-component mixture will have a total of K! equivalent solutions

– Corresponding to K! ways of assigning K sets of parameters to K components

• E.g., for K=3 K!=6: 123, 132, 213, 231, 312, 321

– For any given point in the space of parameter values there will be a further K!-1 additional points all giving exactly same distribution

• However any of the equivalent solutions is as good as the other

A

B

C B

A C Two ways of labeling three Gaussian subclasses

A density p(x |θ) is identifiable if θ ≠θ ' then there is an x for which p(x |θ) ≠ p(x |θ')

EM for Gaussian Mixtures

• EM is a method for finding maximum likelihood solutions for models with latent variables

• Begin with log-likelihood function

• We wish to find that maximize this quantity

• Take derivatives in turn w.r.t

– Means and set to zero

– covariance matrices and set to zero – mixing coefficients and set to zero

ln p(X |π,µ,Σ) = ln

k=1

∑

K πkN(xn |µk,Σk)⎧ ⎨

⎩

⎫ ⎬

n=1 ⎭

∑

Nπ , µ , Σ

µk

Σ

kπ

k18

EM for GMM: Derivative wrt

• Begin with log-likelihood function

• Take derivative w.r.t the means and set to zero

– Making use of exponential form of Gaussian – Use formulas:

– We get

the posterior probabilities

ln p(X |π,µ,Σ) = ln

k=1

∑

K πkN(xn |µk,Σk)⎧ ⎨

⎩

⎫ ⎬

n=1 ⎭

∑

N

0 = πkN(xn | µk,Σk) π jN(xn | µj,Σj)

∑

j n=1N

∑

(xn − µkk

∑

−1 )d

dx ln u = u'

u

and d dx

eu = euu'

Inverse of covariance matrix

µ

kµ

kγ (z )

M.L.E. solution for Means

• Multiplying by (assuming non-

singularity)

• Where we have defined

– Which is the effective number of points assigned to cluster k

1

1 ( )x

N

k nk n

k n

z

µ N γ

=

=

∑

Mean of kth Gaussian component is the weighted mean of all the points in the data set:

where data point xn is weighted by the posterior probability that

component k was responsible for generating xn

Nk = γ(znk)

n=1 N

∑

Σ

kM.L.E. solution for Covariance

• Set derivative wrt to zero

– Making use of mle solution for covariance matrix of single Gaussian

– Similar to result for a single Gaussian for the data set but each data point weighted by the corresponding posterior probability

– Denominator is effective no of points in component

Σk = 1

Nk γ(znk)(xn − µk)(xn − µk)

T n=1

N

∑

Σ

kM.L.E. solution for Mixing Coefficients

• Maximize w.r.t.

– Must take into account that mixing coefficients sum to one

– Achieved using Lagrange multiplier and maximizing

– Setting derivative wrt to zero and solving gives

k k

N

π

= Nln p(X |π,µ,Σ) + λ πk −1

k=1

∑

K⎛

⎝ ⎜ ⎞

⎠ ⎟

ln p(X | π , µ , Σ) π

kπ

kSummary of m.l.e. expressions

• GMM maximum likelihood estimates

22 1

1 ( )x

N

k nk n

k n

z

µ N γ

=

=

∑

Σk = 1

Nk γ(znk)(xn − µk)(xn − µk)

T n=1

N

∑

k k

N

π

= N Nk = γ(znk) n=1N

∑

Parameters (means)

Parameters(covariance matrices)

Parameters

(Mixing Coefficients)

All three are in terms of responsibliities

EM Formulation

• The results for are not closed form

solutions for the parameters

– Since the responsibilities depend on those parameters in a complex way

• Results suggest an iterative solution

• An instance of EM algorithm for the

particular case of GMM

µk, Σk,πk γ (znk )

Informal EM for GMM

• First choose initial values for means, covariances and mixing coefficients

• Alternate between following two updates

– Called E step and M step

• In E step use current value of parameters to evaluate posterior probabilities, or

responsibilities

• In the M step use these posterior probabilities to to re-estimate means, covariances and mixing coefficients

EM using Old Faithful

Data points and Initial mixture model

Initial E step Determine responsibilities

After first M step

Re-evaluate Parameters

After 2 cycles After 5 cycles After 20 cycles

Comparison with K-Means

K-means result E-M result

Animation of EM for Old Faithful Data

• http://en.wikipedia.org/wiki/

File:Em_old_faithful.gif

#initial parameter estimates (chosen to be deliberately bad) theta <- list( tau=c(0.5,0.5),

mu1=c(2.8,75), mu2=c(3.6,58),

sigma1=matrix(c(0.8,7,7,70),ncol=2), sigma2=matrix(c(0.8,7,7,70),ncol=2) ) Code in R

Practical Issues with EM

• Takes many more iterations than K-means

• Each cycle requires significantly more

comparison

• Common to run K-means first in order to

find suitable initialization

• Covariance matrices can be initialized to

covariances of clusters found by K-means

• EM is not guaranteed to find global

maximum of log likelihood function

Summary of EM for GMM

• Given a Gaussian mixture model

• Goal is to maximize the likelihood function

w.r.t. the parameters (means, covariances

and mixing coefficients)

Step1: Initialize the means , covariances

and mixing coefficients and evaluate

initial value of log-likelihood

µ

kΣ

kπ

kEM continued

• Step 2: E step: Evaluate responsibilities using current parameter values

• Step 3: M Step: Re-estimate parameters using current responsibilities

1

(x | µ , ) ( )=

(x | µ , ))

k k k

k K

j j j

j

z N

N γ π

π

=

Σ

∑ Σ

where

Σknew = 1

Nk γ(znk)(xn −µk

new)(xn −µknew)T

n=1 N

∑

µknew = 1

Nk γ(znk)xn

n=1 N

∑

Nk = γ(znk)

n=1 N

∑

πk

new = Nk N

EM Continued

• Step 4: Evaluate the log likelihood

– And check for convergence of either parameters or log likelihood

• If convergence not satisfied return to Step 2

ln p(X |π,µ,Σ) = ln

k=1

∑

K πkN(xn |µk,Σk)⎧ ⎨

⎩

⎫ ⎬

n=1 ⎭